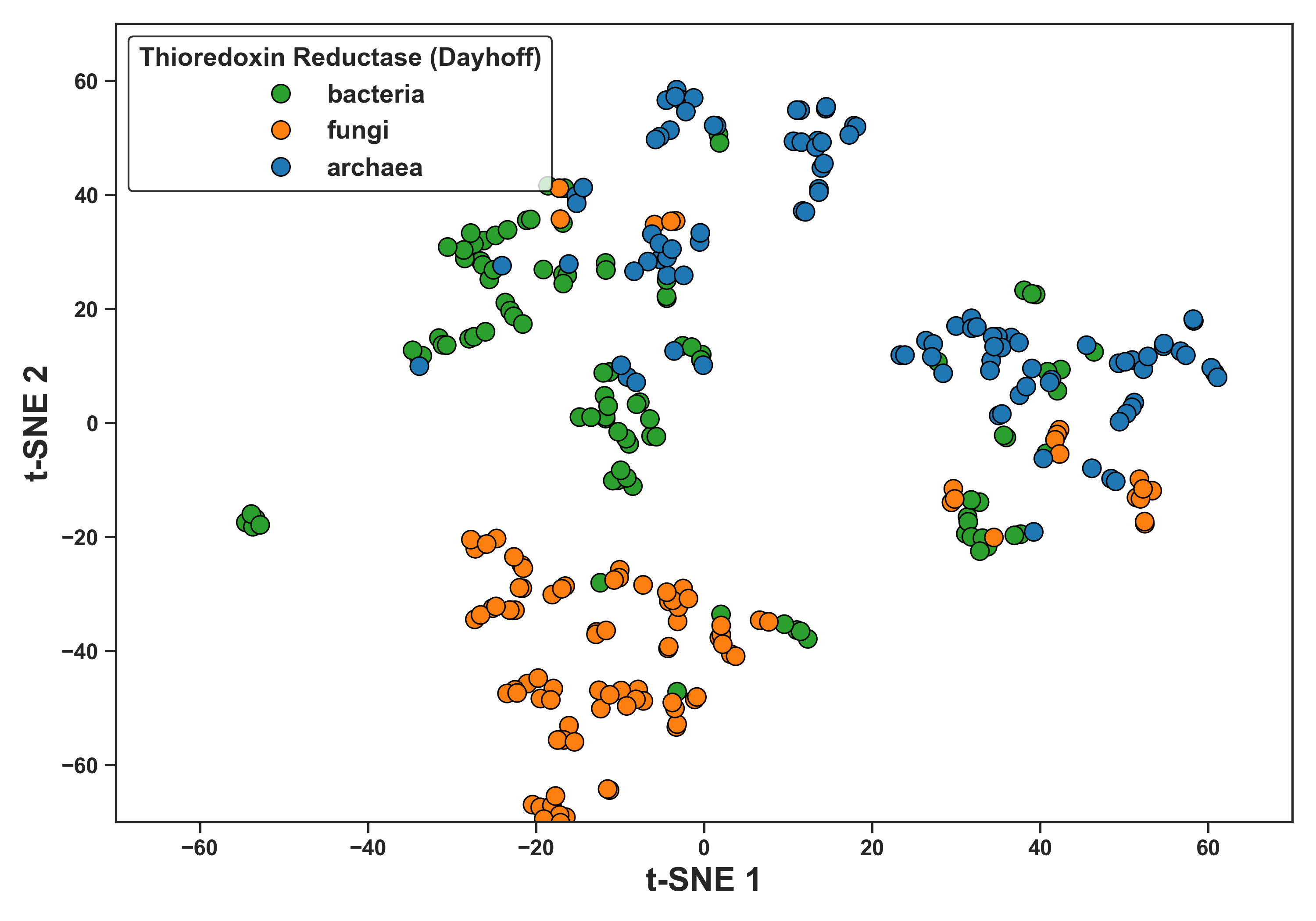

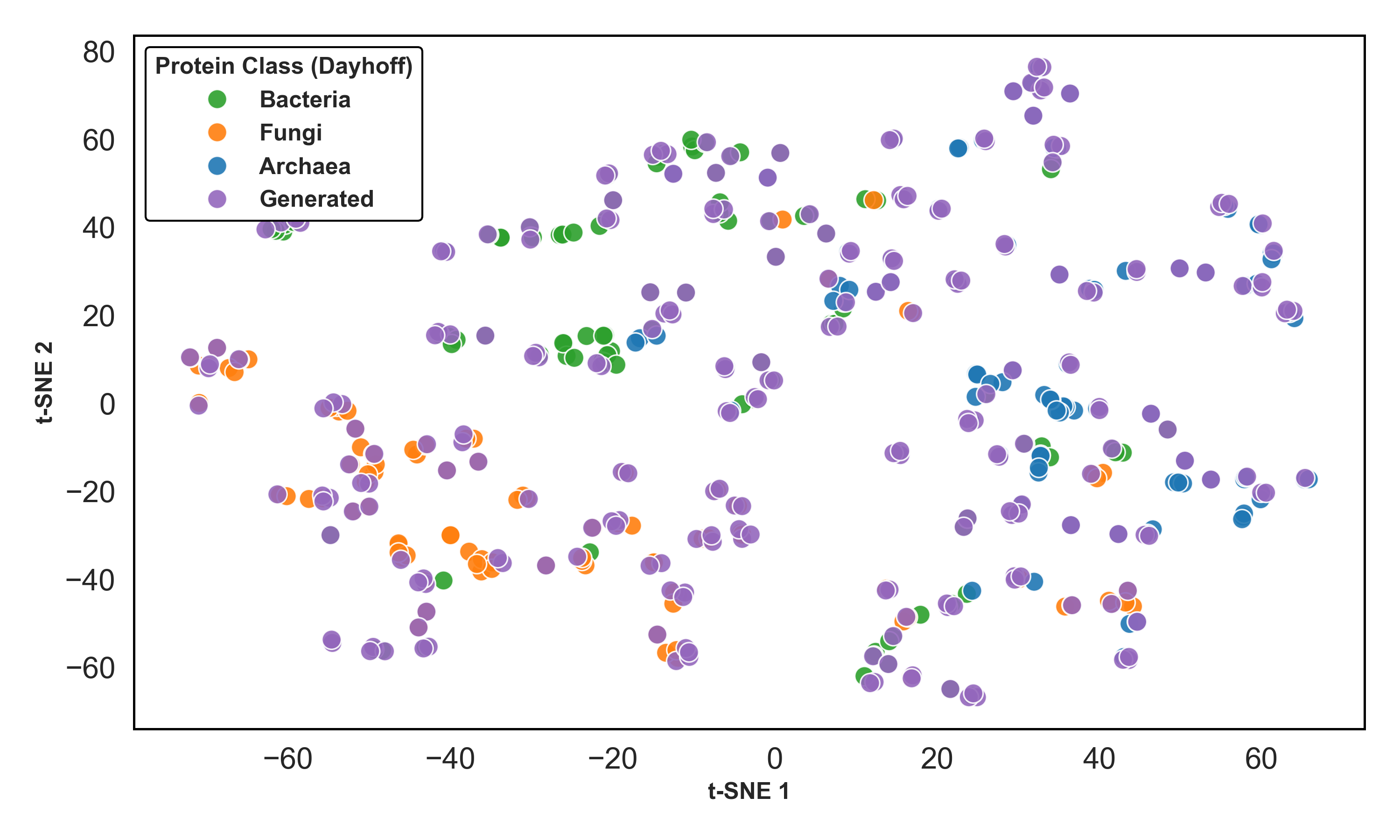

Figure 1: t-SNE by Domain (Dayhoff)

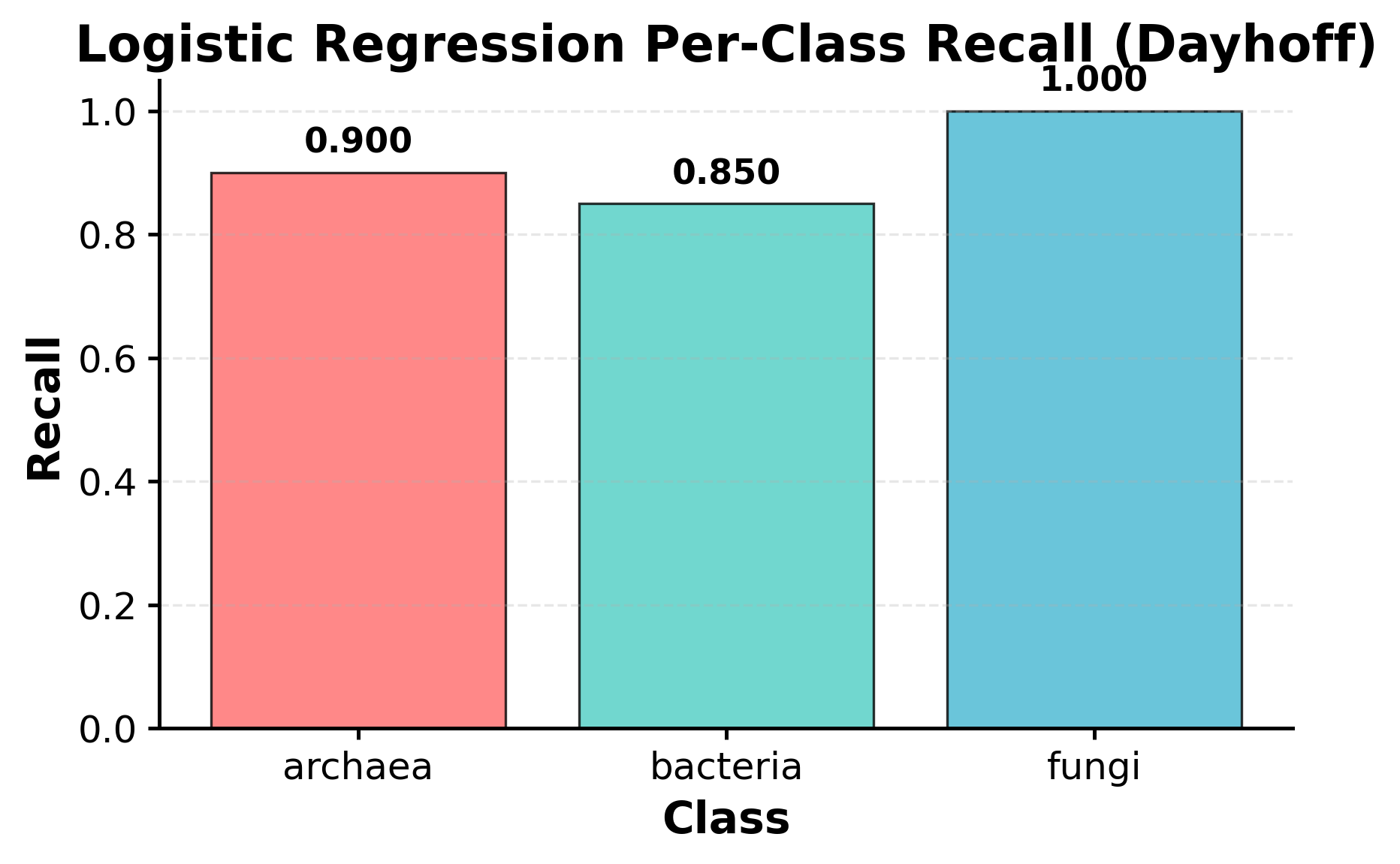

Figure 2: Logistic Regression Per-Class Recall (Dayhoff)

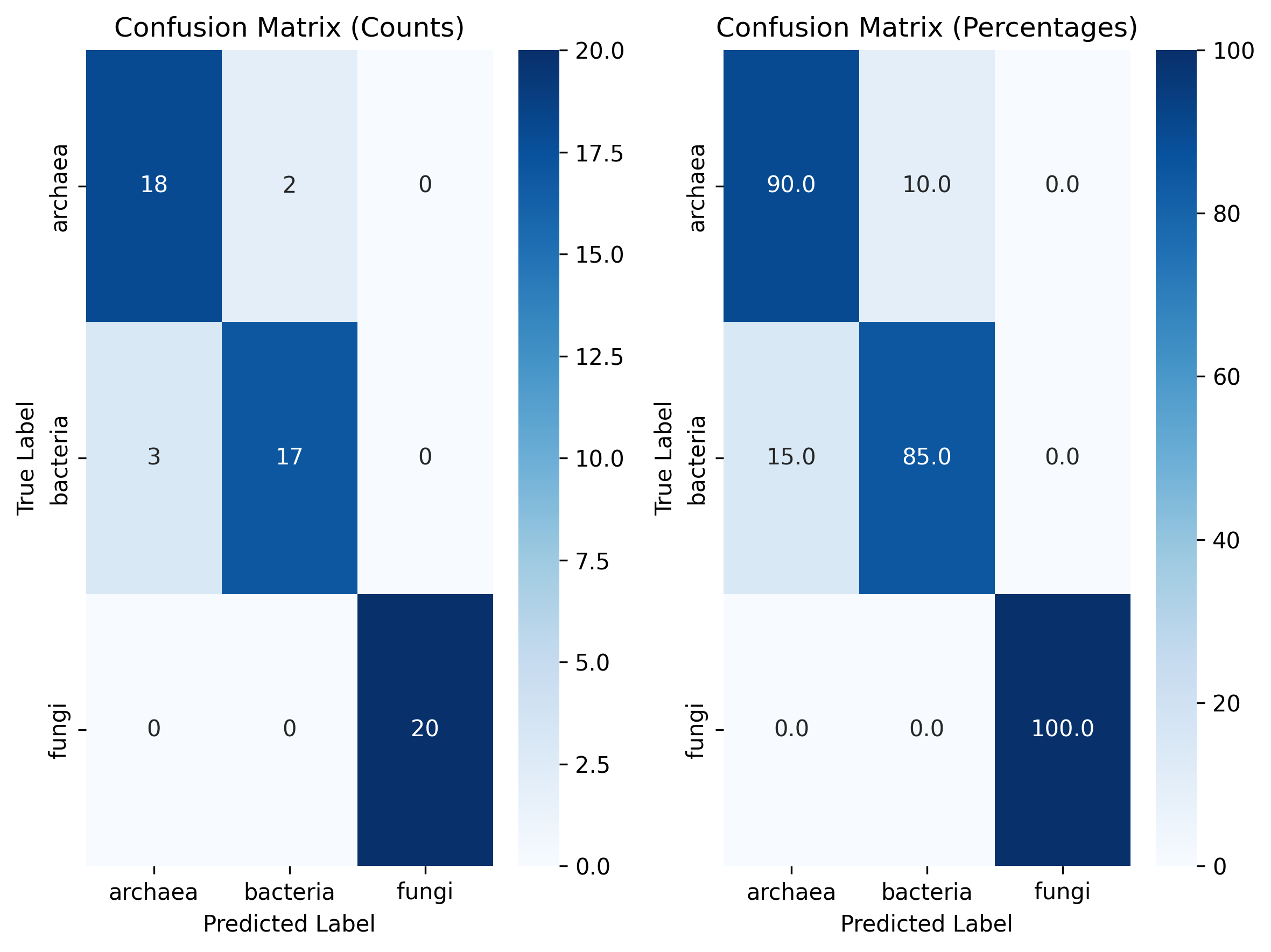

Figure 3: Logistic Regression Confusion Matrix (Dayhoff)

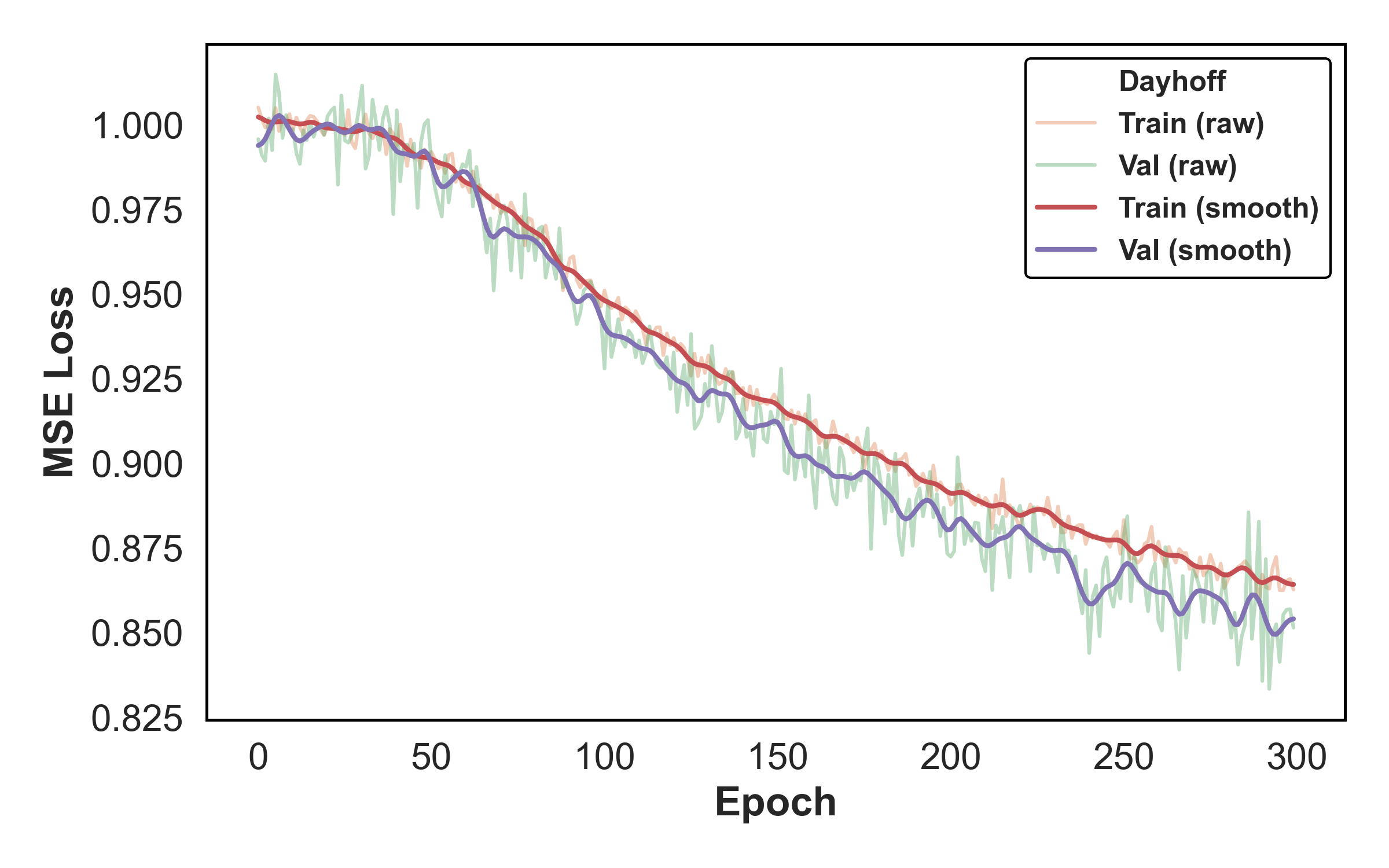

Figure 4: Diffusion Training Loss (Dayhoff)

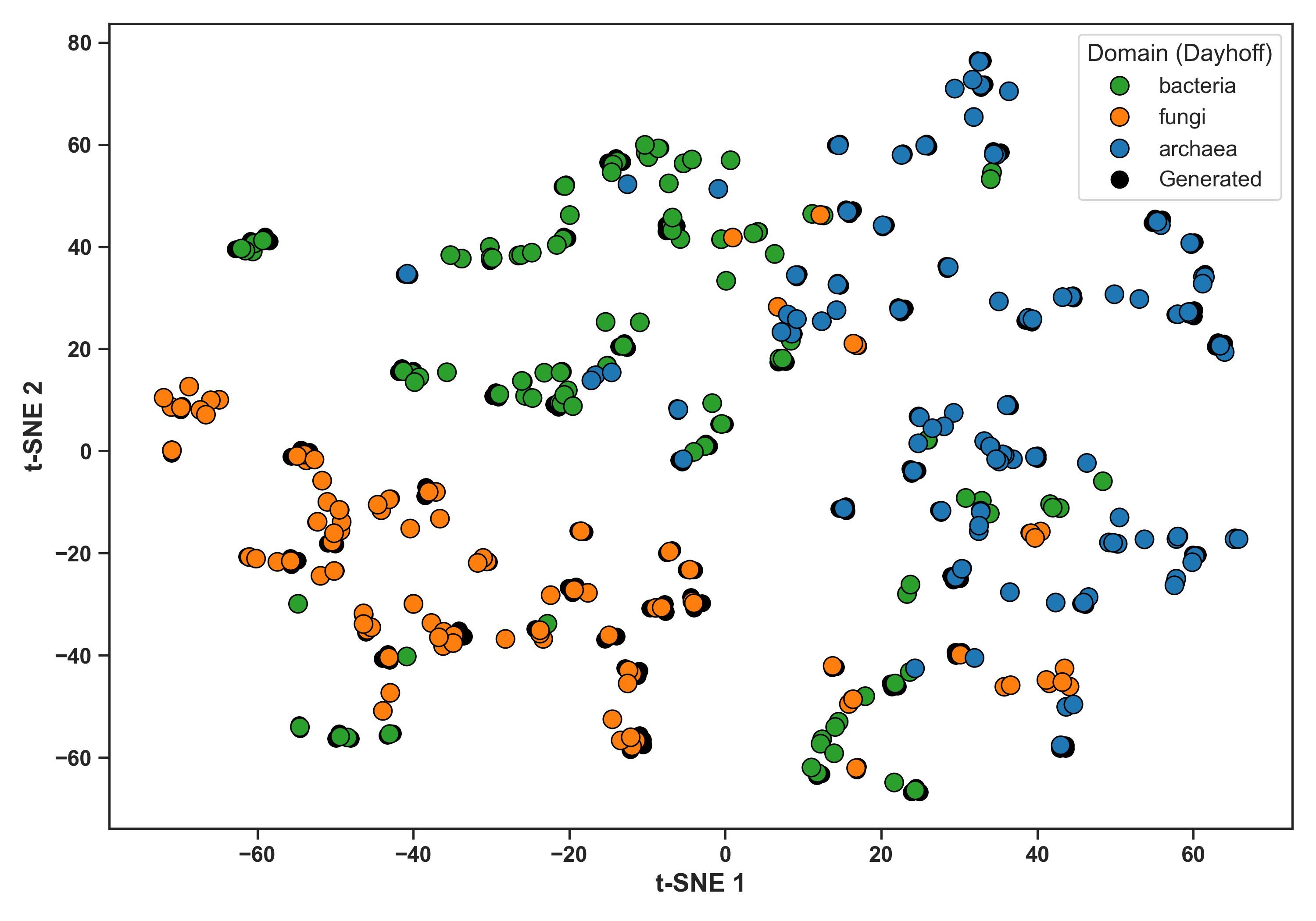

Figure 5: Generated Embeddings t-SNE (Dayhoff)

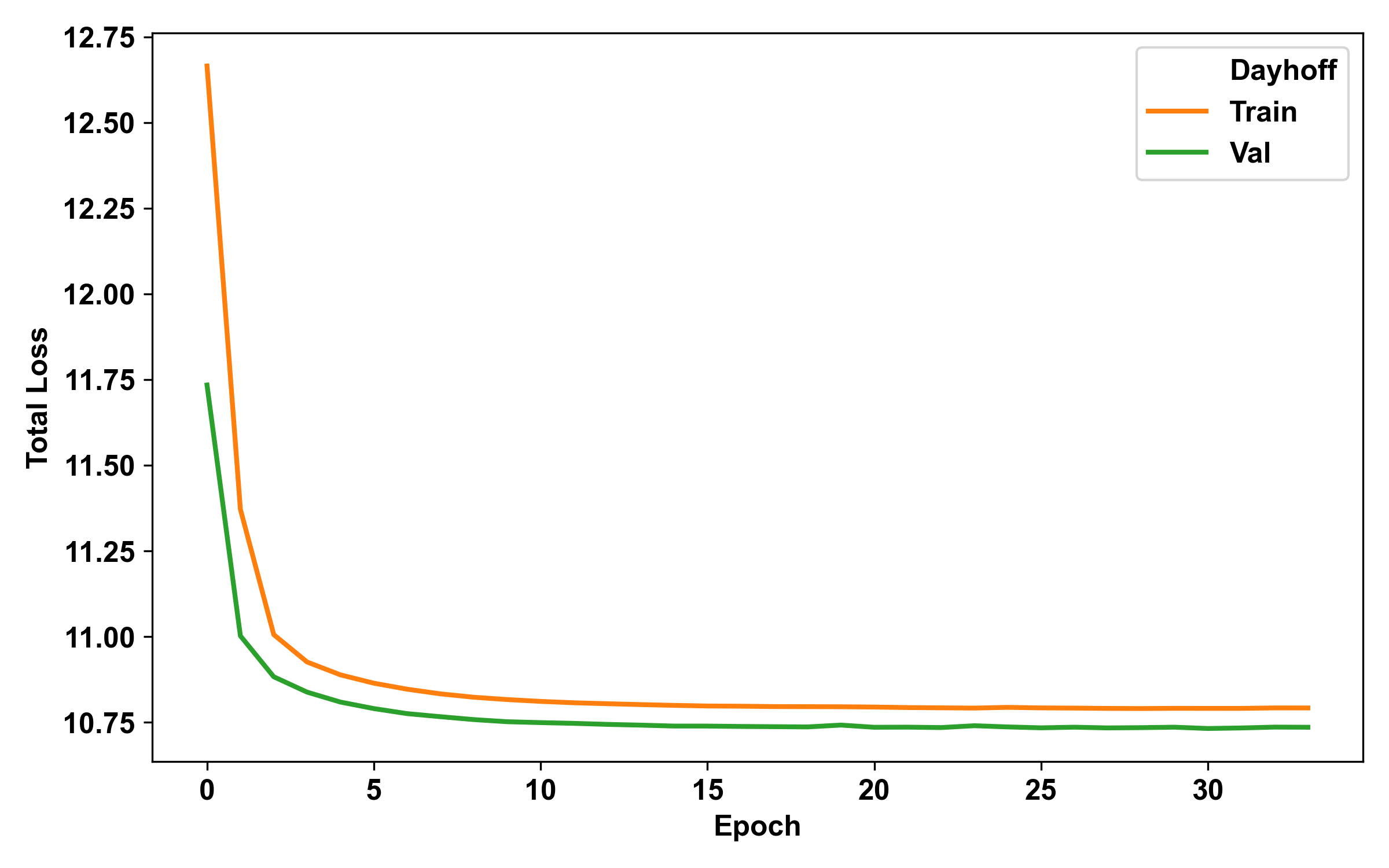

Figure 6: Transformer Decoder Loss (Dayhoff)

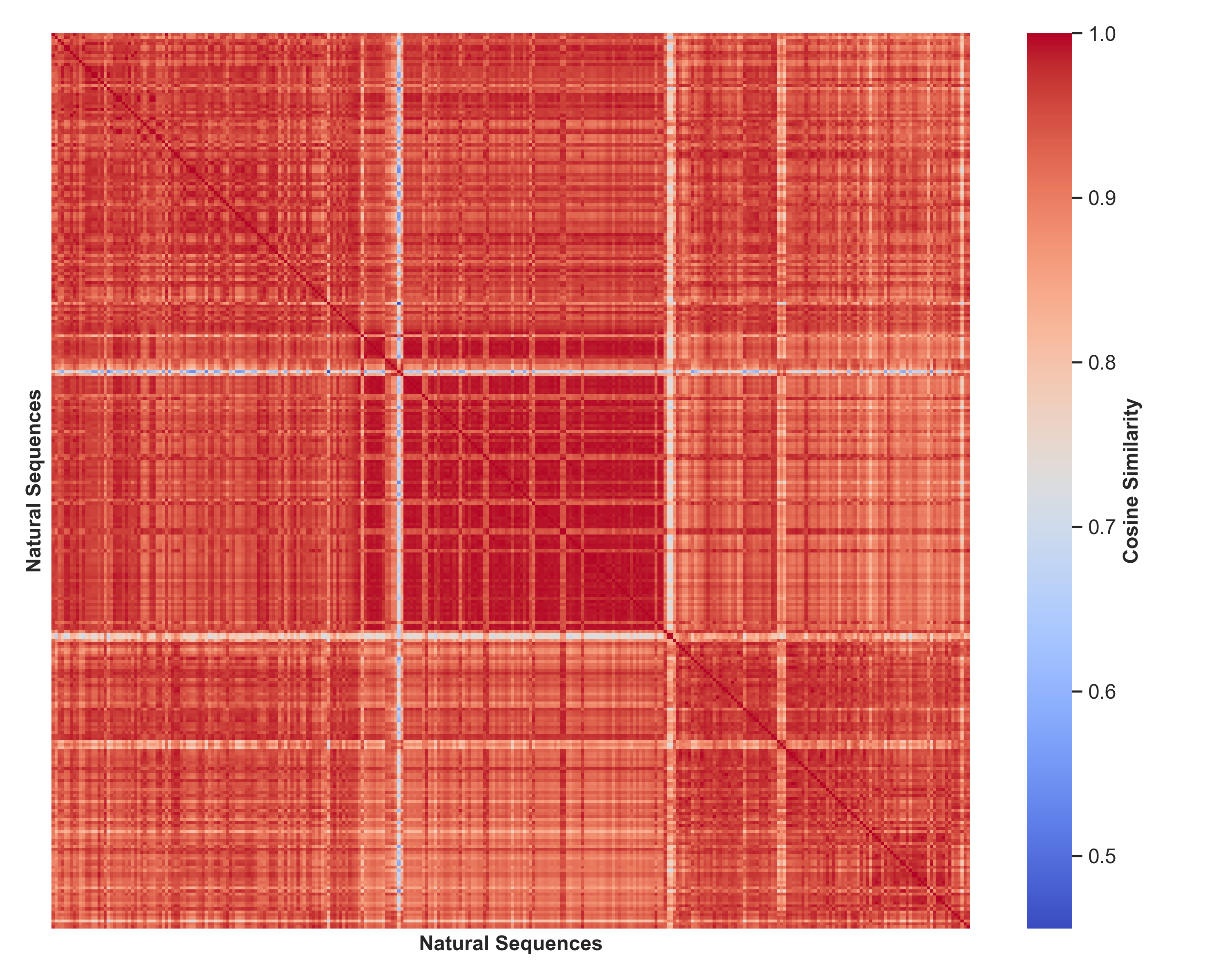

Figure 7: Real-Real Cosine Similarity (Dayhoff)

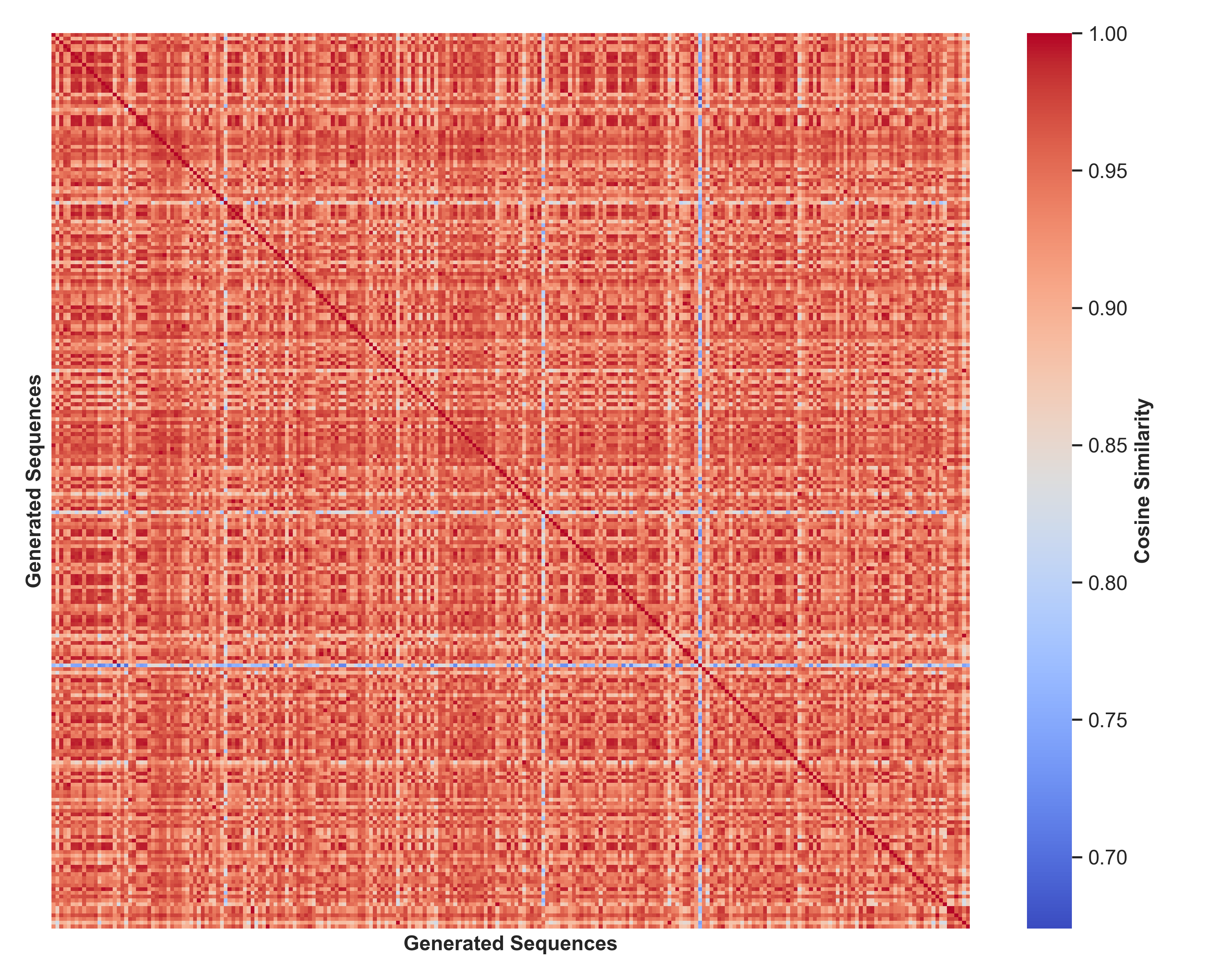

Figure 8: Generated-Generated Cosine Similarity (Dayhoff)

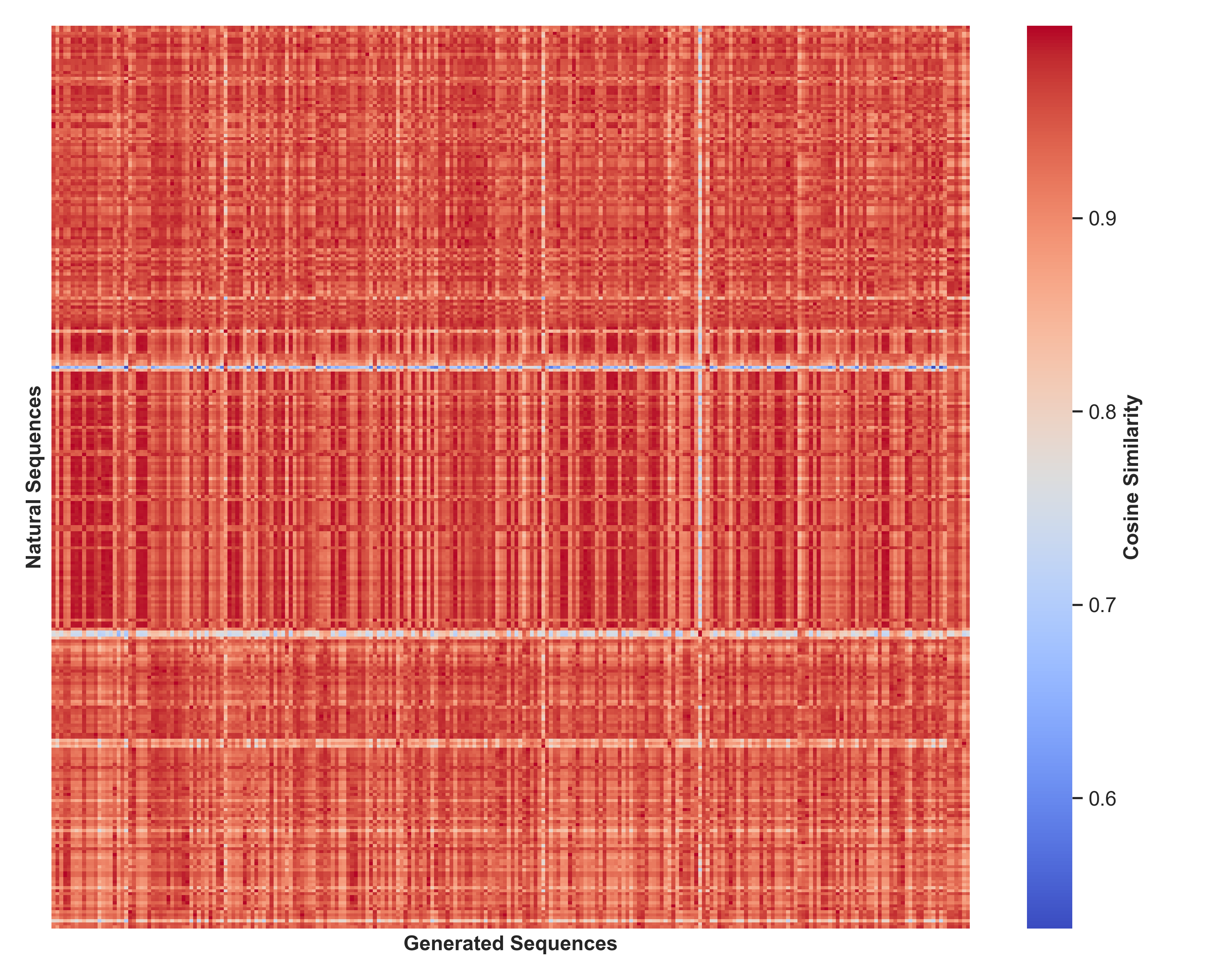

Figure 9: Real-Generated Cosine Similarity (Dayhoff)

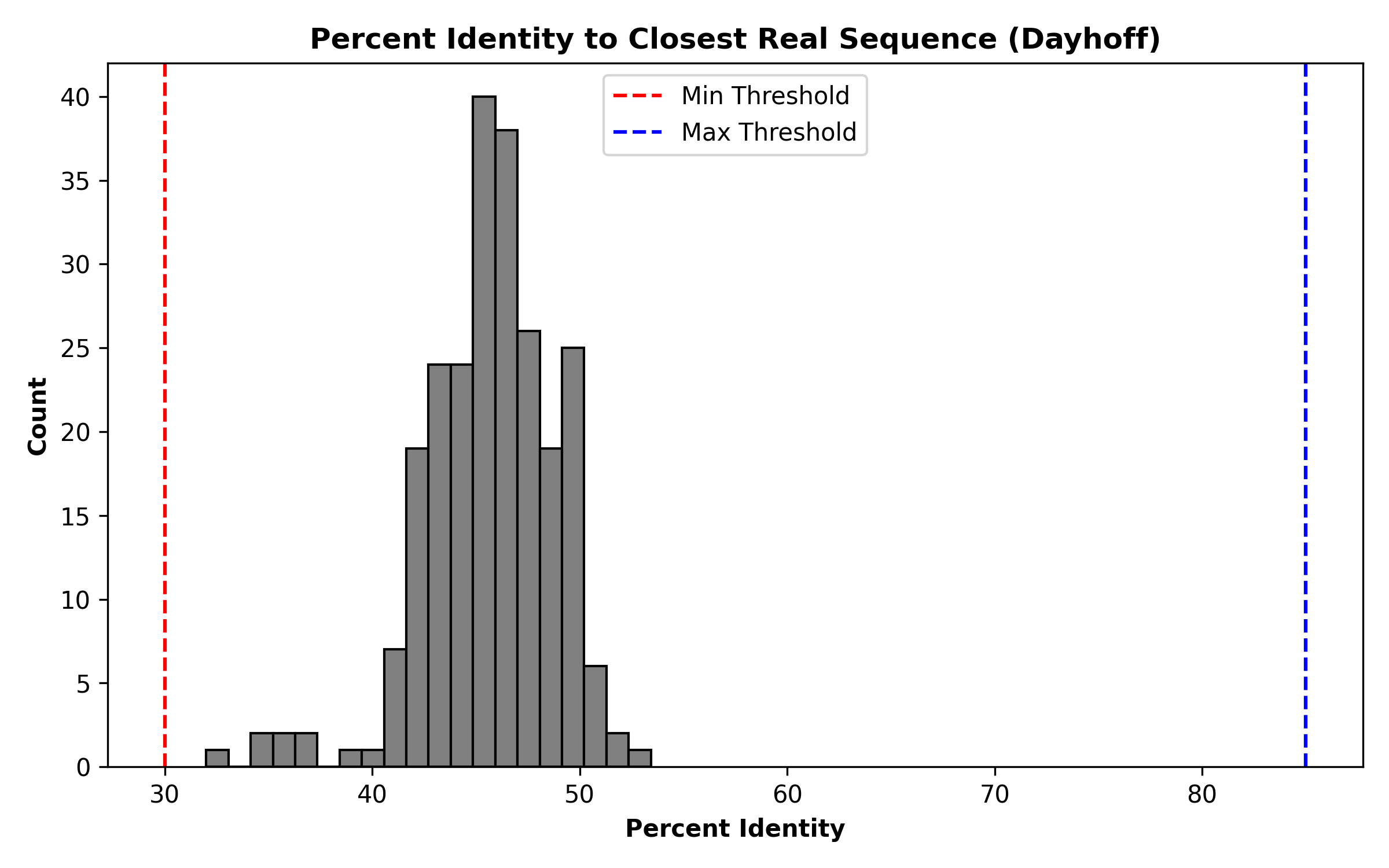

Figure 10: Identity Histogram (Dayhoff)

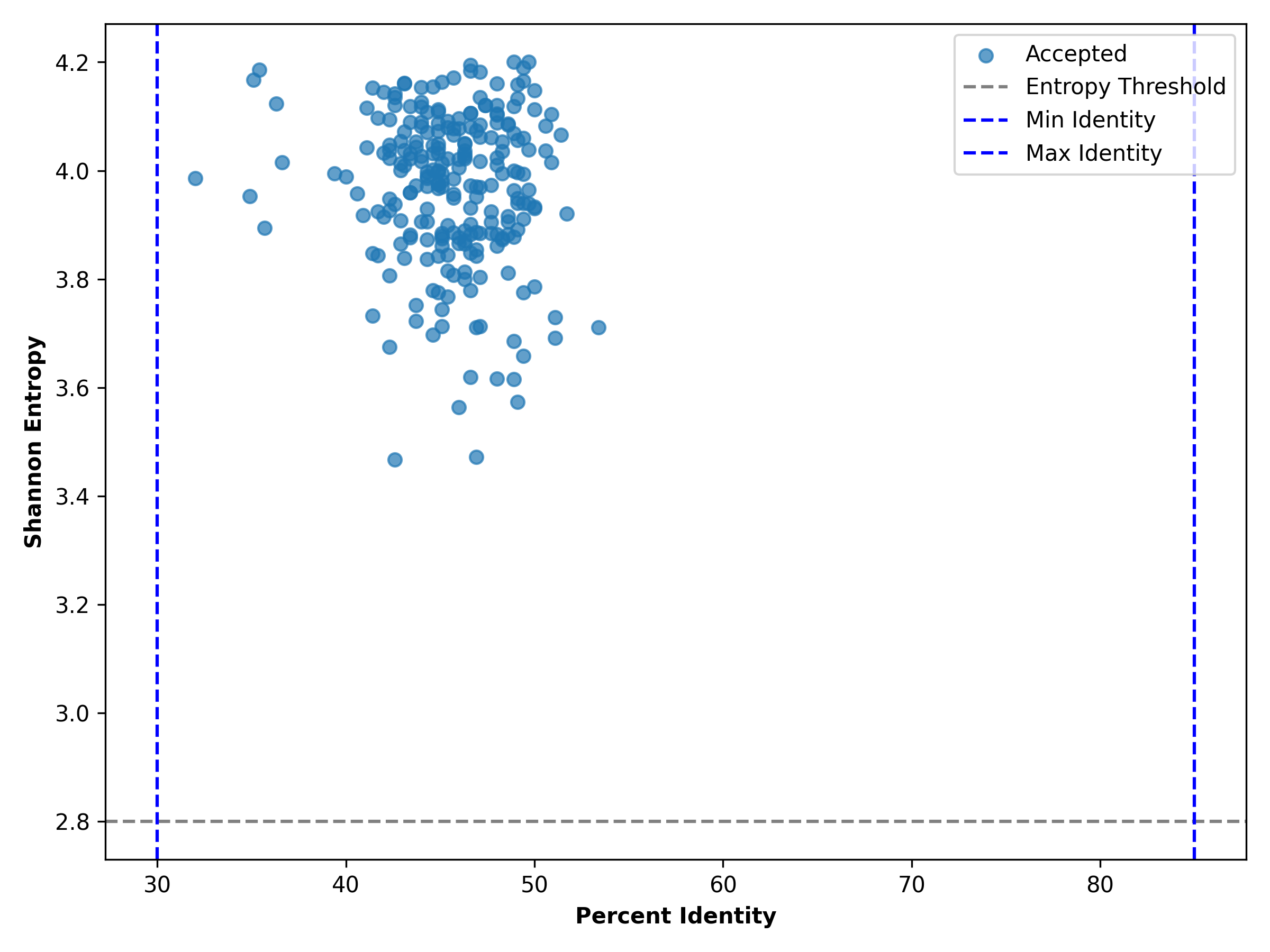

Figure 11: Entropy vs Identity Scatter (Dayhoff)

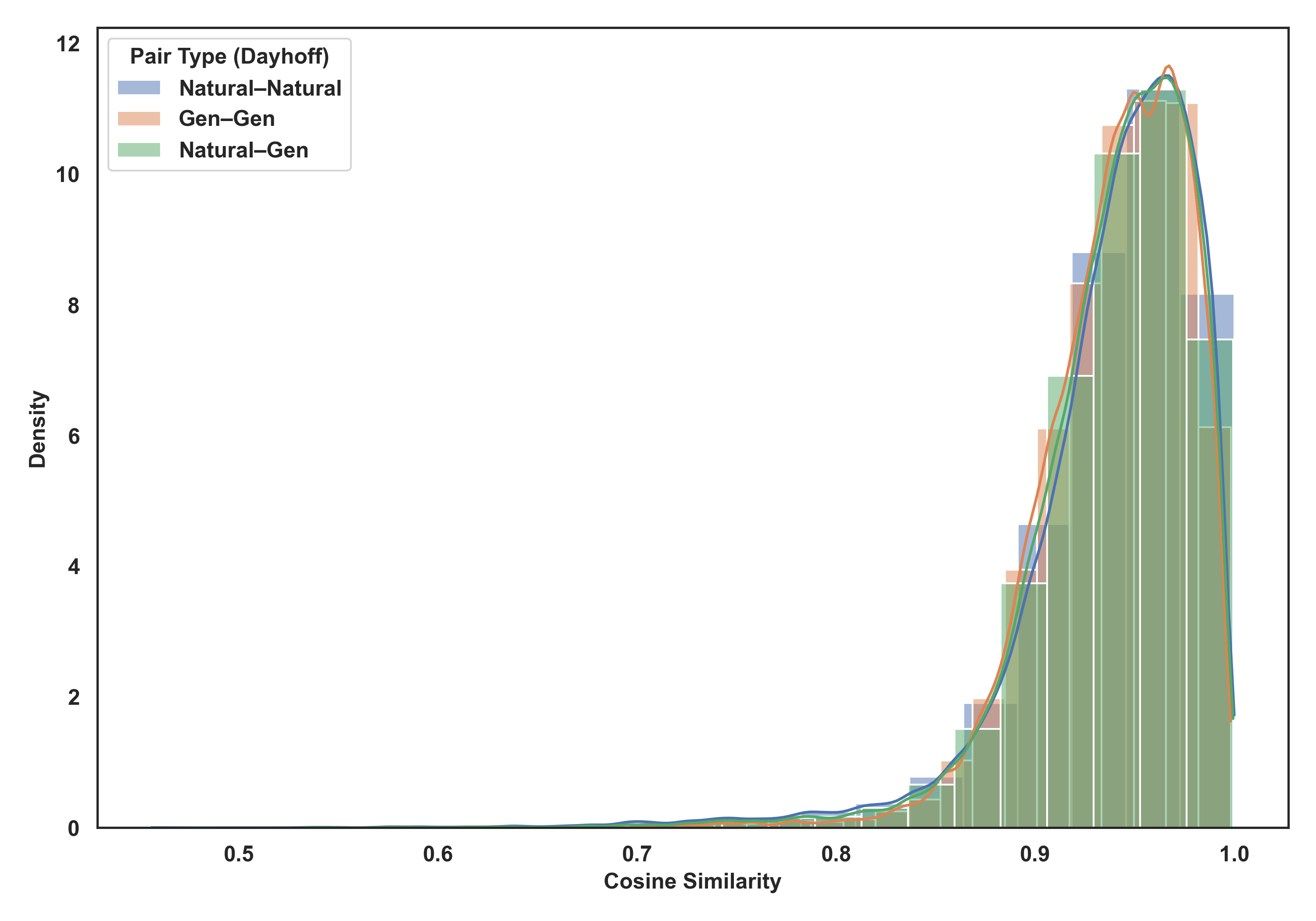

Figure 12: All Histograms (Dayhoff)

Figure 13: t-SNE Domain Overlay (Dayhoff)

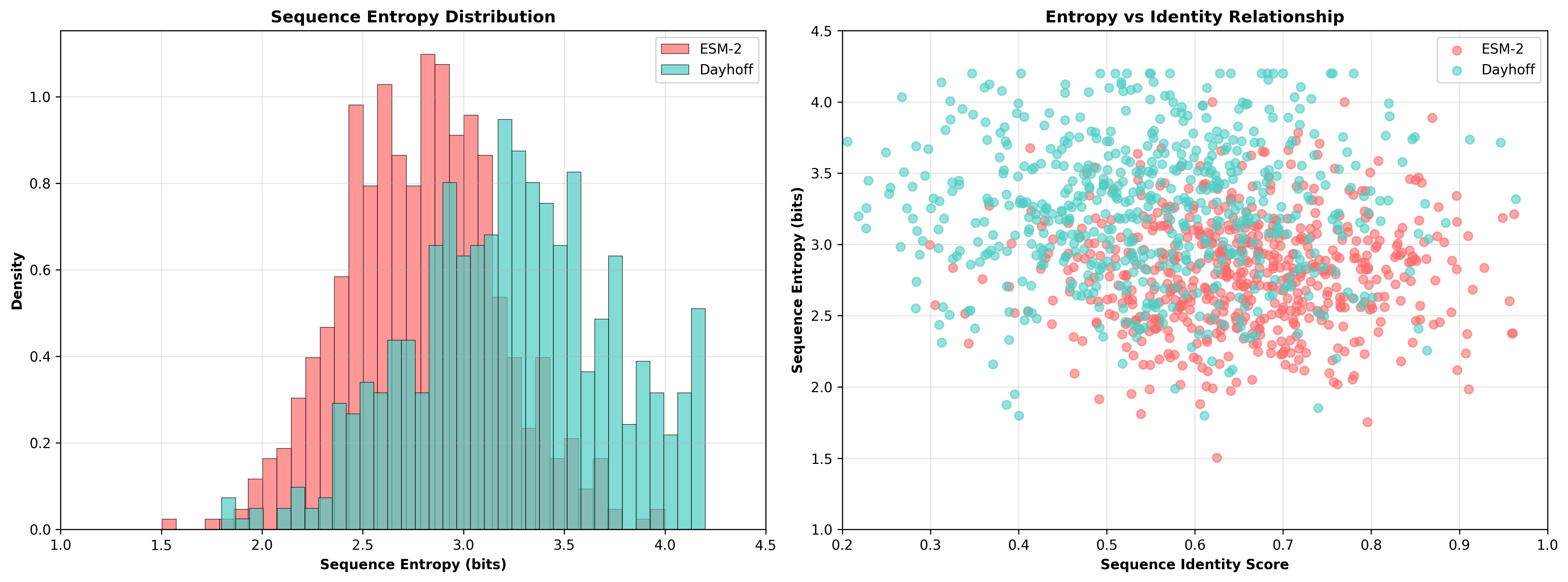

Figure 14: Entropy Overlay Comparison (ESM-2 vs Dayhoff)

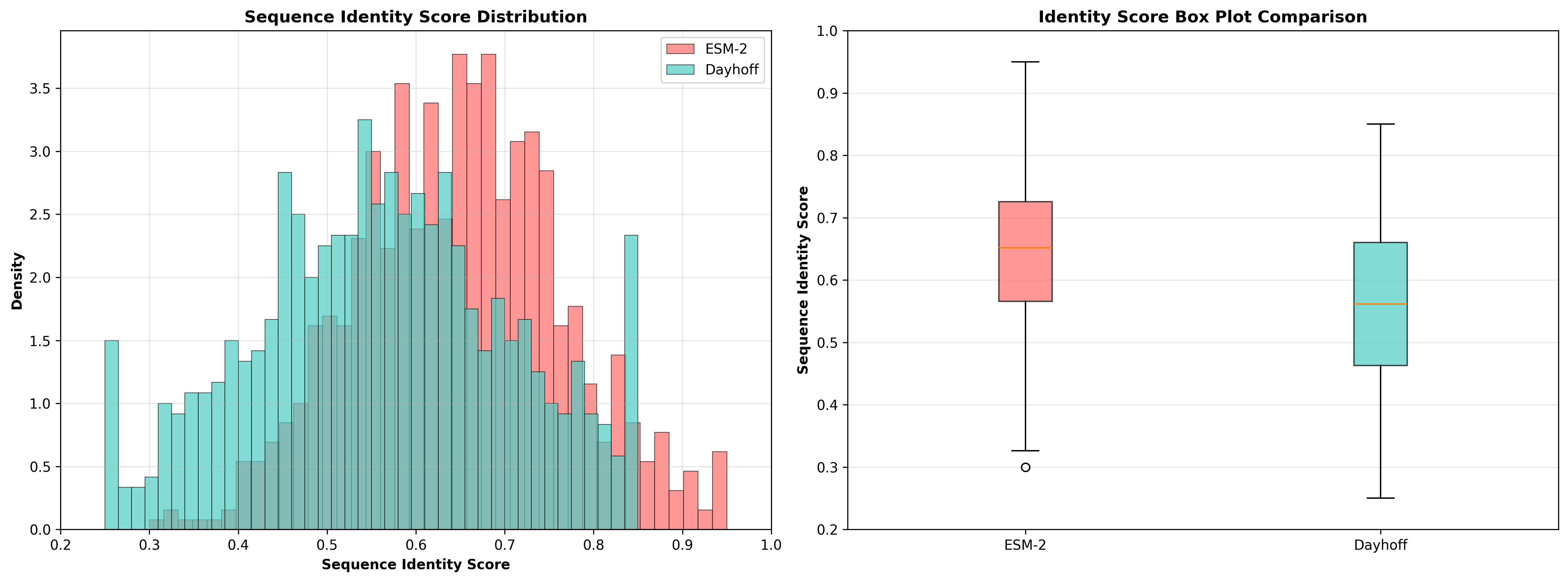

Figure 15: Identity Score Overlay Comparison (ESM-2 vs Dayhoff)

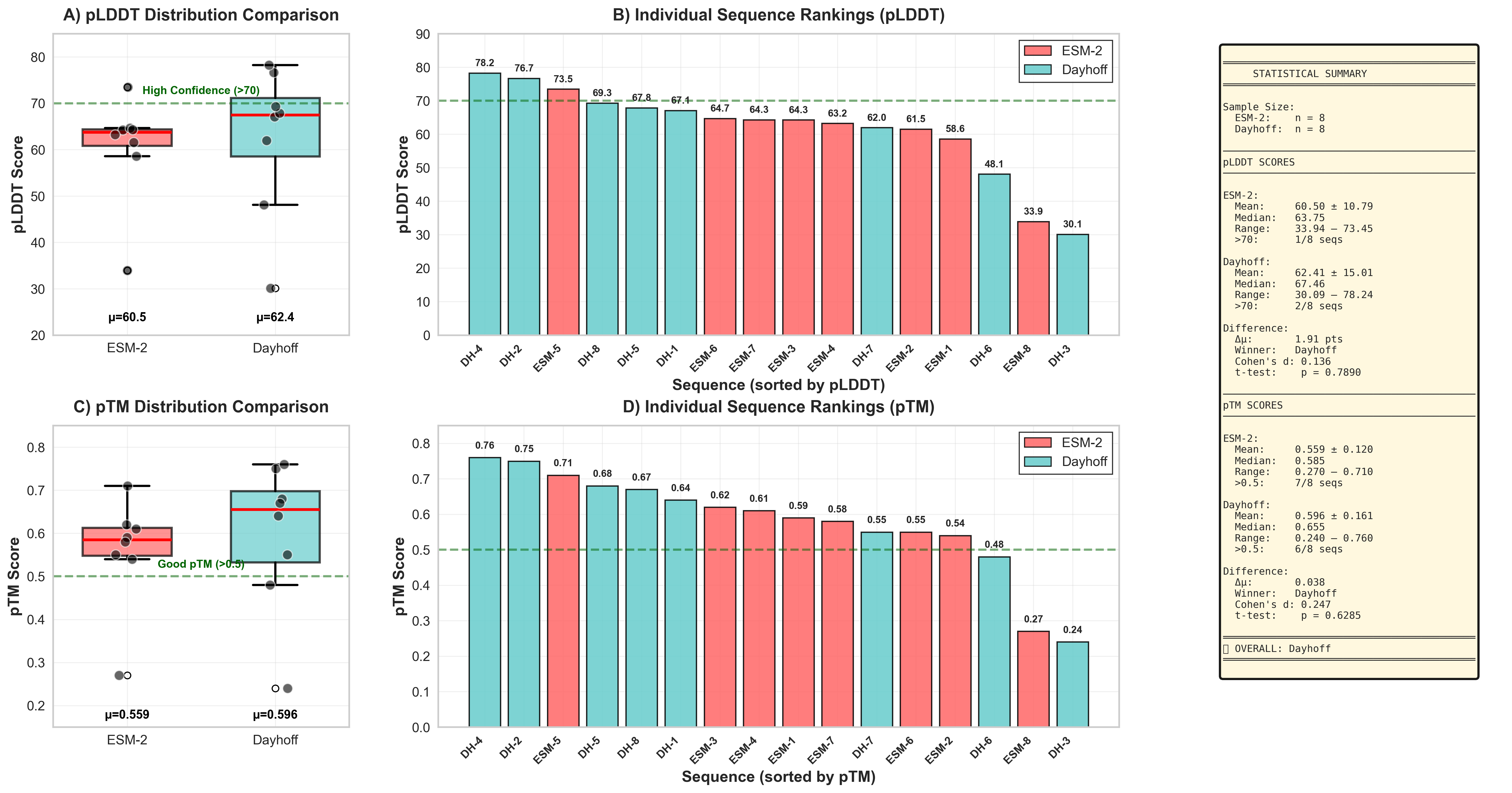

Figure 16: AlphaFold2 Structural Validation (ESM-2 vs Dayhoff)

Key Findings:

• Dayhoff shows slightly higher mean pLDDT (62.41 vs 60.50) and mean pTM (0.596 vs 0.559) than ESM-2

• 25% of Dayhoff sequences vs 12.5% of ESM-2 sequences are high-confidence (pLDDT > 70)

• None of these differences is statistically significant (p > 0.05)

• Both models produce comparable structural quality, which supports Dayhoff as a viable alternative to ESM-2
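
The comparison behind these findings can be sketched as follows. The source does not state which significance test was used, so this sketch assumes a two-sided permutation test on the difference of mean pLDDT. The function names (`summarize`, `permutation_p_value`) and the score lists are hypothetical placeholders, not data from this study.

```python
import random
import statistics


def summarize(plddt, threshold=70.0):
    """Return (mean pLDDT, fraction of sequences with pLDDT > threshold)."""
    mean = statistics.fmean(plddt)
    frac_high = sum(score > threshold for score in plddt) / len(plddt)
    return mean, frac_high


def permutation_p_value(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference of group means.

    Assumption: this stands in for whatever test the study actually used.
    """
    rng = random.Random(seed)
    observed = abs(statistics.fmean(a) - statistics.fmean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_resamples):
        # Reassign group labels at random and recompute the statistic.
        rng.shuffle(pooled)
        diff = abs(
            statistics.fmean(pooled[: len(a)])
            - statistics.fmean(pooled[len(a):])
        )
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly positive.
    return (hits + 1) / (n_resamples + 1)


# Invented placeholder scores, NOT the values measured in this study.
dayhoff_plddt = [54.0, 57.5, 59.0, 60.5, 62.0, 63.5, 65.0, 71.0, 73.5, 76.0]
esm2_plddt = [52.0, 55.0, 57.0, 58.5, 60.0, 61.5, 63.0, 64.5, 66.0, 72.5]

if __name__ == "__main__":
    for name, scores in [("Dayhoff", dayhoff_plddt), ("ESM-2", esm2_plddt)]:
        mean, frac_high = summarize(scores)
        print(f"{name}: mean pLDDT = {mean:.2f}, pLDDT > 70: {frac_high:.1%}")
    p = permutation_p_value(dayhoff_plddt, esm2_plddt)
    print(f"permutation p-value = {p:.3f}")
```

A permutation test is used here because it makes no normality assumption, which matters when each group holds only a handful of predicted structures. The same function applies unchanged to pTM scores.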